Introduction

Securing data transmitted over the internet is essential to protect sensitive information from attackers. One of the most common ways to secure web traffic is SSL (strictly speaking its successor TLS, although the name SSL is still widely used), which encrypts data as it travels between clients and servers. However, encrypting and decrypting traffic costs CPU time, and this overhead matters more once multiple servers handle incoming traffic. Load balancing, e.g., with an Azure Application Gateway, is a common way to distribute web traffic across multiple servers/backends and improve performance. In this blog post, I will briefly explain three common SSL techniques used in load balancing: SSL offloading, SSL passthrough, and SSL bridging.

Disclaimer: Some of this information isn't valid for every load balancer. During my research, I came across products that, e.g., define SSL bridging differently and do not break up SSL traffic at all. So please take this blog post with a grain of salt and treat it as a conceptual overview of these techniques.

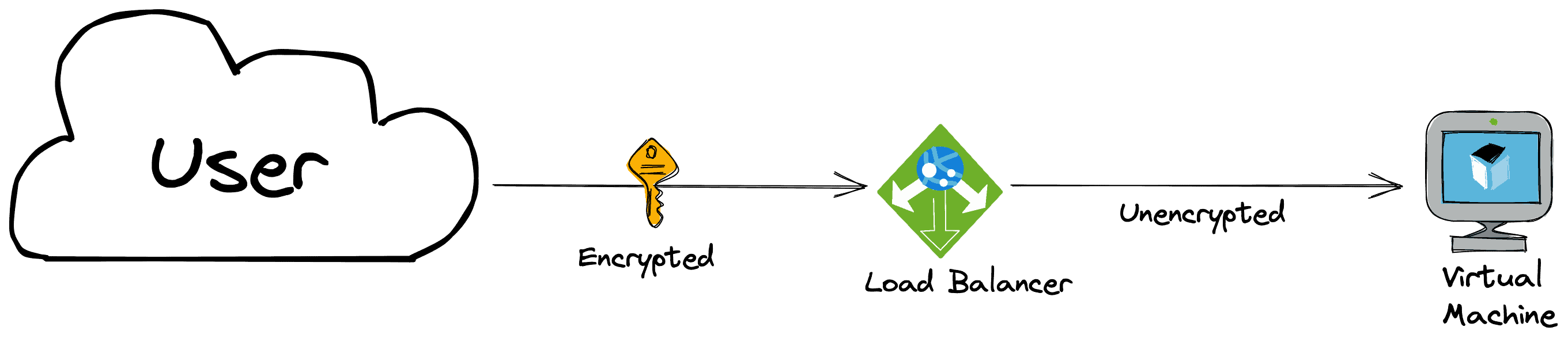

SSL Offloading / SSL Termination

This technique terminates SSL connections at the load balancer instead of passing the encrypted traffic to the backend servers. The load balancer decrypts the SSL traffic and forwards it unencrypted to the servers/backends, which reduces the servers' processing overhead (CPU) and improves performance. The trade-off is that traffic between the load balancer and the backends travels in plaintext, so that network segment has to be trusted.

Example: A heavily trafficked e-commerce website uses SSL offloading to improve performance. The load balancer terminates the SSL connection, decrypts the traffic, and forwards it unencrypted to the backend servers, which no longer spend CPU time on encryption.
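To make the idea more concrete, here is a minimal sketch of SSL offloading using Python's standard `ssl` and `socket` modules. It is not how any particular load balancer is implemented. The function names, the certificate paths, and the backend address are assumptions for illustration, and the sketch relays only a single request and response.

```python
import socket
import ssl


def make_frontend_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Build the server-side TLS context for the client-facing listener."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # certfile/keyfile are hypothetical paths to the load balancer's certificate.
    ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    return ctx


def forward_plaintext(client_conn, backend_conn, bufsize: int = 65536):
    """Relay one request and one response between client and backend.

    client_conn is expected to be the TLS-wrapped socket, so recv() returns
    bytes that the TLS layer has already decrypted.
    """
    request = client_conn.recv(bufsize)    # decrypted at the load balancer
    backend_conn.sendall(request)          # sent to the backend unencrypted
    response = backend_conn.recv(bufsize)  # plaintext answer from the backend
    client_conn.sendall(response)          # encrypted again towards the client
    return request, response


def serve_once(listener: socket.socket, ctx: ssl.SSLContext, backend_addr) -> None:
    """Accept one client, terminate TLS, and talk plain TCP to the backend."""
    raw_conn, _ = listener.accept()
    with ctx.wrap_socket(raw_conn, server_side=True) as tls_conn:
        # backend_addr is a hypothetical (host, port) tuple of one backend.
        with socket.create_connection(backend_addr) as backend:
            forward_plaintext(tls_conn, backend)
```

The important detail is that only the client-facing socket is wrapped with `wrap_socket(server_side=True)`. The connection to the backend is a plain TCP socket, which is exactly where the CPU saving on the backend comes from.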

SSL Passthrough

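With SSL passthrough, the load balancer passes SSL traffic through to the backend servers without terminating or decrypting it. This approach is practical when the backend servers need access to SSL-specific information, such as client certificates, or when all traffic should stay encrypted end to end. Because the load balancer cannot read the content, it can only base its routing decisions on connection-level information such as IP addresses and ports, not on URLs, headers, or cookies.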

Example: A video conferencing service uses SSL passthrough to keep traffic encrypted end to end. Because the load balancer passes SSL traffic through to the backend servers without decrypting it, the communication between each client and the service's servers stays private, even from the load balancer itself.
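Conceptually, passthrough turns the load balancer into a plain TCP relay. The following is a minimal sketch in Python, assuming two already connected sockets. The names `pipe` and `passthrough` are made up for this post and do not come from any product.

```python
import socket
import threading


def pipe(src: socket.socket, dst: socket.socket, bufsize: int = 65536) -> int:
    """Copy bytes from src to dst until src closes; never parse or decrypt them."""
    total = 0
    while True:
        chunk = src.recv(bufsize)
        if not chunk:  # the peer closed its sending side
            break
        dst.sendall(chunk)
        total += len(chunk)
    try:
        dst.shutdown(socket.SHUT_WR)  # pass the end-of-stream on to the other side
    except OSError:
        pass  # the other side may already be gone
    return total


def passthrough(client: socket.socket, backend: socket.socket) -> None:
    """Relay both directions at once; the TLS session is between client and backend."""
    worker = threading.Thread(target=pipe, args=(backend, client), daemon=True)
    worker.start()
    pipe(client, backend)
    worker.join()
```

Note that the sketch does not import `ssl` at all. The relay has no certificate and no key, so everything it forwards is opaque ciphertext to it.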

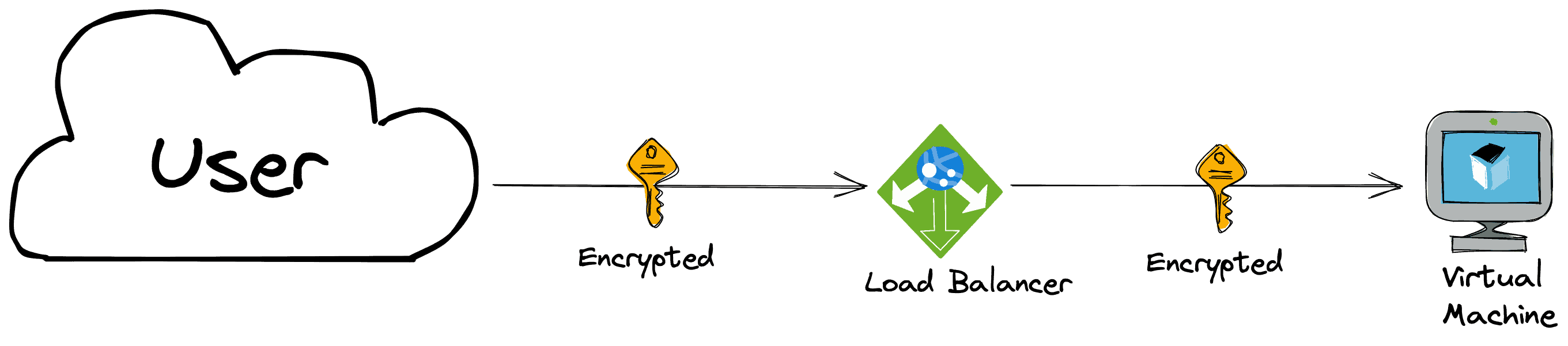

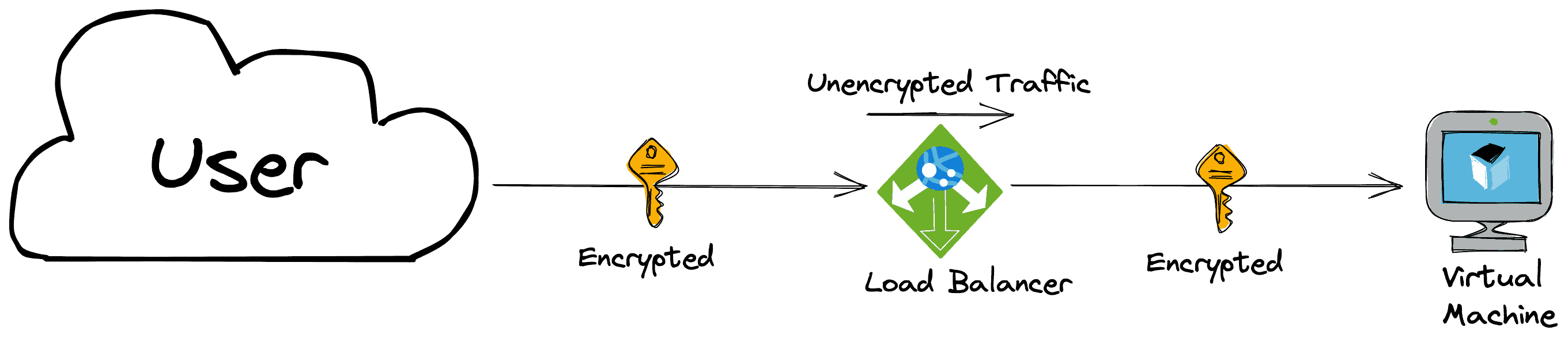

SSL Bridging

In SSL bridging, the load balancer terminates the SSL connection from the client, decrypts the traffic, and then re-encrypts it before sending it to the backend servers. This is useful when you want to inspect or scan the traffic before it reaches your web server while still keeping it encrypted on the way to the backends. You can also define exceptions to this decryption, e.g., for regulatory purposes.

Example: A software development company uses SSL bridging to secure data transferred between its clients and servers. By decrypting SSL traffic at the load balancer, the company can monitor and analyze the data for potential security threats. The load balancer then re-encrypts the traffic before forwarding it to the backend servers for processing.
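The decrypt, inspect, re-encrypt sequence can be sketched in Python as follows. This is an illustration under assumptions, not a real web application firewall: `is_allowed` and its markers are placeholder rules invented for this post, `backend_hostname` is a hypothetical name used to verify the backend certificate, and only a single request and response are handled.

```python
import ssl


def make_backend_context() -> ssl.SSLContext:
    """Client-side TLS context used to re-encrypt traffic towards the backend."""
    ctx = ssl.create_default_context()  # verifies backend certificate and hostname
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def is_allowed(plaintext: bytes, blocked_markers=(b"<script>", b"' OR 1=1")) -> bool:
    """Placeholder inspection rule: reject requests containing a blocked marker."""
    lowered = plaintext.lower()
    return not any(marker.lower() in lowered for marker in blocked_markers)


def bridge_request(tls_client_conn, backend_sock, backend_ctx,
                   backend_hostname: str, bufsize: int = 65536) -> bool:
    """Inspect one decrypted request, then forward it over a new TLS session.

    tls_client_conn is the already terminated client connection, so recv()
    returns plaintext. backend_sock is a connected, not yet encrypted socket.
    """
    request = tls_client_conn.recv(bufsize)
    if not is_allowed(request):
        tls_client_conn.sendall(
            b"HTTP/1.1 403 Forbidden\r\nContent-Length: 0\r\n\r\n"
        )
        return False
    # Second TLS session: the load balancer acts as a TLS client here.
    with backend_ctx.wrap_socket(
        backend_sock, server_hostname=backend_hostname
    ) as tls_backend:
        tls_backend.sendall(request)
        tls_client_conn.sendall(tls_backend.recv(bufsize))
    return True
```

In contrast to offloading, there are two separate TLS sessions, one per side of the load balancer. The plaintext exists only inside the load balancer, which is the point where inspection can happen.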

Conclusion

SSL offloading, SSL passthrough, and SSL bridging are three techniques that load balancers use to manage SSL traffic. SSL offloading decrypts SSL traffic at the load balancer and forwards unencrypted traffic to the backend servers, which can improve performance and simplify server management. SSL passthrough forwards encrypted traffic directly to the backend servers, which keeps the traffic encrypted end to end but prevents the load balancer from inspecting it. SSL bridging decrypts SSL traffic at the load balancer, allows the traffic to be monitored and analyzed, and re-encrypts it before forwarding it to the backend servers. Each technique has advantages and disadvantages, and the appropriate choice depends on your specific needs.